However, feel free to experiment with the data you have available! The results of the system should scale quite well with the amount of data used for training.

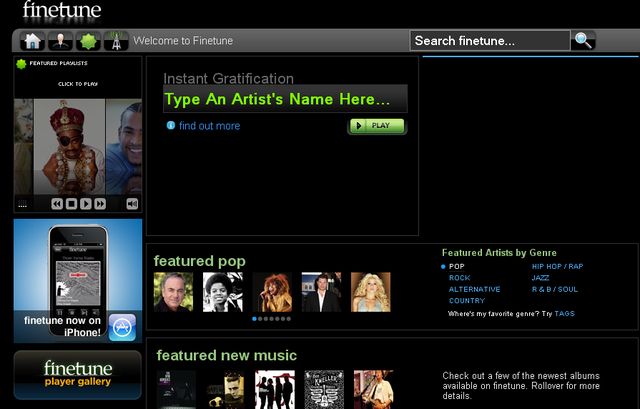

Training a model from scratch generally requires large amounts of training data if the target audio domain has substantial timbre diversity. Keep in mind that by default audio samples must be at least 47 s long (`--max_lat_len 512`). If you need to encode shorter samples, specify a lower value both during encoding and during training; the minimum is 256, corresponding to about 23 s, which is the length of the samples used for training. During training, chunks are randomly cropped as a data augmentation technique.
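To pick a `--max_lat_len` value for a dataset of short clips, it can help to convert latent lengths into approximate durations. The sketch below is only a back-of-the-envelope estimate: it assumes a linear relationship and uses the rounded figure quoted above (256 latent vectors for about 23 s). The function names are hypothetical and are not part of musika.

```python
# Rounded figures quoted in the text: 256 latent vectors ~ 23 s of audio.
REFERENCE_LATENTS = 256
REFERENCE_SECONDS = 23.0  # approximate, so all results are estimates


def approx_seconds(max_lat_len: int) -> float:
    """Estimate the minimum clip duration (in seconds) for a given max_lat_len."""
    if max_lat_len < REFERENCE_LATENTS:
        raise ValueError("max_lat_len must be at least 256")
    return max_lat_len * REFERENCE_SECONDS / REFERENCE_LATENTS


def clip_is_long_enough(clip_seconds: float, max_lat_len: int) -> bool:
    """Check whether a clip is (approximately) long enough to be encoded."""
    return clip_seconds >= approx_seconds(max_lat_len)


# With the rounded 23 s figure, 512 latents come out at about 46 s,
# close to the 47 s quoted for the default setting.
print(approx_seconds(512))
print(clip_is_long_enough(30.0, 512))  # a 30 s clip is too short for the default
print(clip_is_long_enough(30.0, 256))  # but fits the minimum setting
```

Because the reference figures are rounded, leave a margin of a second or two when a clip is close to the limit.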


    python musika_generate.py --load_path checkpoints/misc --num_samples 10 --seconds 120 --save_path generations

Training

You can train a musika system using your own custom dataset. A pretrained encoder and decoder are provided to produce training data (in the form of compressed latent sequences) of any arbitrary domain. Note that the provided universal autoencoder will sometimes produce low-quality samples, for example for samples that mainly contain vocals: training a custom encoder and decoder for a specific dataset would produce higher-quality samples, especially for narrow music domains. A training script for custom encoders/decoders will be provided!

Before proceeding, make sure you have an Nvidia GPU and CUDA 11.2 installed. Mixed precision is enabled by default, so if your GPU does not support it, disable it with the `--mixed_precision False` flag.

You may also experience errors when training with XLA (`--xla True` by default, for faster training) if CUDA is installed locally in the environment. See this link for the solution to this problem. Alternatively, specify `--xla False` (the speedup provided by XLA is not substantial).

First of all, encode audio files to training samples (in the form of compressed latent vectors) with:

    python musika_encode.py --files_path folder_of_audio_files --save_path folder_of_encodings

`folder_of_encodings` will be created automatically if it does not exist.

Musika encodes audio samples into chunks of latent-vector sequences of equal length (`--max_lat_len`), which by default are double the size of the chunks used during training.

Finetuning

The fastest way to train a musika system on custom data is to finetune a provided checkpoint that was pretrained on a diverse music dataset. Since training musika from scratch usually requires large amounts of training data, finetuning can represent a good compromise in some cases.
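The chunking scheme described in this section (encoded chunks of `max_lat_len` latent vectors, from which training takes a randomly positioned shorter window) can be illustrated with a small sketch. This is not musika's actual implementation: the function name is hypothetical, and a plain list of indices stands in for a sequence of latent vectors.

```python
import random


def random_crop(chunk, crop_len, rng=random):
    """Return a random contiguous window of crop_len items from chunk."""
    if crop_len > len(chunk):
        raise ValueError("crop_len cannot exceed the chunk length")
    # randint is inclusive on both ends, so the last valid start is allowed.
    start = rng.randint(0, len(chunk) - crop_len)
    return chunk[start:start + crop_len]


# Stand-in for one encoded chunk: 512 latent vectors (here just their indices).
encoded_chunk = list(range(512))

# Training sees a window half as long, at a different offset on each draw.
training_window = random_crop(encoded_chunk, 256, random.Random(0))
print(len(training_window), training_window[0])
```

Storing chunks that are longer than the training window means each pass over the data can see a differently aligned excerpt of the same audio, which is what makes the cropping act as augmentation.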
